Yesterday, I posted about a little thought experiment game I’d come up with to look into risk-reward decisions in multiplayer games.

In the game, each player in turn picks a number, from 1 up to the highest number on whatever die is being used. Then everyone rolls, trying to get their number or higher. Out of those who succeeded, the one who picked the highest number (i.e. who took the biggest risk) wins. If everyone fails, they all reroll until at least one person succeeds.

It’s easy enough to work out some basic results for the two-player version on paper. Yesterday, I posed six questions of increasing difficulty to be answered, whether mathematically or by simple guesswork. Here they are again, now with the answers.

In general, is it better for the first player if the die has more sides, or fewer?

Fewer sides. The easiest way to see this is to consider what advantage each player has. The first player’s advantage is having first pick of the numbers; if one number is sufficiently better than all the others, he’ll actually be better off than the second. But the second player’s advantage is that of controlling the relative values of the two players’ numbers. The second player’s advantage grows with the number of sides on the die (as he has finer control), while the first player’s diminishes, as the difference in probabilities between one number and its adjacent numbers grows smaller.

Is there a rule of thumb for perfect play? How should the two players decide which numbers to take?

Well, things are pretty simple for the second player; either he should choose the next number higher than his opponent’s, or he should choose 1. There’s clearly no reason to go more than 1 higher than the opponent, as this only diminishes his own odds without added benefit. Likewise, if he chooses to play it safer than the opponent, there’s no reason not to just play it as safely as possible. If the first player chooses wisely, it may not be trivial for the second player to pick which of these two moves to make, but the range of choice is still a lot narrower, so it’s easier to make the right call.

How about for the first player? Well, his goal should probably be to make life as hard as possible for his opponent, putting him in a situation where neither of his two options is much better than the other. It’s easy to see that choices at the two extremes are no good, so the right answer is likely to fall close to the middle of the spectrum. Intuitively, one might guess that it should be the number that gives a 50/50 shot, but this turns out not to be correct, except for dice with few sides.
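The reasoning above can be checked by brute force. Here is a minimal sketch in Python, assuming the two players may not pick the same number (the rules as stated don’t cover ties between picks). The function names `second_win`, `best_reply` and `best_first_pick` are made up for illustration.

```python
from fractions import Fraction

def second_win(n, a, b):
    """Chance that the second player wins on an n-sided die when the
    first player picks a and the second picks b (assumes a != b)."""
    p = Fraction(n - a + 1, n)  # first player's chance of rolling a or higher
    q = Fraction(n - b + 1, n)  # second player's chance of rolling b or higher
    if b > a:
        # The higher pick wins whenever it hits; the lower needs a miss first.
        second, first = q, (1 - q) * p
    else:
        first, second = p, (1 - p) * q
    # Rerolls after a double miss only rescale by the chance of a decisive round.
    return second / (first + second)

def best_reply(n, a):
    """Second player's best pick against a, and his resulting win chance."""
    options = [b for b in range(1, n + 1) if b != a]
    b = max(options, key=lambda b: second_win(n, a, b))
    return b, second_win(n, a, b)

def best_first_pick(n):
    """First player's pick that minimises the second player's best reply,
    together with the first player's own win chance."""
    a = min(range(1, n + 1), key=lambda a: best_reply(n, a)[1])
    return a, 1 - best_reply(n, a)[1]

if __name__ == "__main__":
    for n in (3, 4, 6, 20, 100):
        a, odds = best_first_pick(n)
        print(n, a, float(odds))
```

Using exact fractions rather than floats avoids rounding noise when two replies are exactly tied, as can happen on small dice. When replies tie, `best_reply` simply reports the lowest-numbered one.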

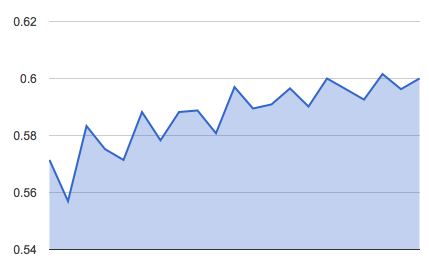

As you move to dice with more and more sides, the ideal probability for the first player to aim for converges on the solution of a cubic equation: 1.5 - sqrt(1.25), or about 0.381966. So, for instance, on a 100-sided die, the number to pick is 62, which hits 39% of the time (which way to round is tougher, and beyond my capabilities, but brute forcing it numerically gives the result that 62 is better than 63 in that situation).

The graph to the right shows the exact probabilities of hitting the numbers ideally chosen by the first player for dice ranging from 3 up to 100 sides.
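The rounding question for the 100-sided die is easy to check numerically. The sketch below leans on the rule of thumb that the second player only ever replies with the next number up or with 1; `first_player_odds` is a hypothetical helper name, not anything from the original working.

```python
from fractions import Fraction

def first_player_odds(n, a):
    """First player's win chance on an n-sided die after picking a,
    assuming the second player takes the better of a+1 and 1."""
    p = Fraction(n - a + 1, n)      # chance of rolling a or higher
    reply_low = 1 - p               # second picks 1: he wins iff the first misses
    if a < n:
        q = Fraction(n - a, n)      # chance of rolling a+1 or higher
        reply_high = q / (q + (1 - q) * p)
    else:
        reply_high = Fraction(0)    # nothing higher is left to pick
    return 1 - max(reply_low, reply_high)

# Compare the candidates on either side of the ideal ~0.382 hit chance.
for a in (61, 62, 63, 64):
    print(a, float(first_player_odds(100, a)))
```

Picking 62 leaves the second player’s two replies almost perfectly balanced, while picking 63 makes the safe reply of 1 clearly the better one, which is why 62 comes out ahead.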

Are there any die sizes for which it’s better to pick first? How many, and which one(s)?

Only one: the three-sided die. There, the first player obviously chooses 2. The second player’s best choice is to gamble for a 3, but this still gives the first player a 4/7 (about 57.1%) chance of a win, because the difference in likelihood between the 1/3 and 2/3 shot is sufficient to overcome the advantage the second player has of winning when both succeed.
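To spell out the arithmetic, write p = 2/3 for the first player’s chance of hitting his 2 and q = 1/3 for the second player’s chance of hitting his 3 (q is my own label here). A round is decided either when the second player hits, or when he misses and the first player hits, so:

$$
P(\text{first wins}) = \frac{(1-q)\,p}{q + (1-q)\,p} = \frac{\tfrac{2}{3}\cdot\tfrac{2}{3}}{\tfrac{1}{3} + \tfrac{2}{3}\cdot\tfrac{2}{3}} = \frac{4/9}{7/9} = \frac{4}{7}
$$

If the second player plays it safe with 1 instead, the first player simply wins whenever he hits, which is 2/3 of the time, so gambling on 3 really is the better reply.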

Are there any die sizes for which it doesn’t matter who picks first? How many, and which one(s)?

Two, four and six sides. Of these, the interesting one is the six-sided die – and particularly so because it’s the one we use most commonly! In the case of a two-sided die (a coin), it’s obvious that the odds are 50/50 regardless of who picks what. In the case of a four-sided die, the first player picks 3, and like the three-sided die, the odds aren’t good enough for the second player to come out ahead by picking 4… but unlike the three-sided die, he can pick 1 and come out even, since the first player will roll 3-4 exactly 50% of the time.

The case of the six-sided die is interesting because the second player actually has two equal options. The first player should choose 4, so the second player can again go for 1, counting on the 50% chance of his opponent failing. But the second player can instead pick 5. This is slightly less obvious to work out, but has a neat symmetry to it: 1/3 of the time, the second player will hit his 5-6 and win regardless of what the first player rolled. Of the remaining 2/3, the first player will win 1/2 the time by hitting his 4-6, i.e. a total of 1/3 of the time as well. The remaining 1/3 of the time, we have to roll again, but since the odds are balanced on each roll, we don’t have to work out the whole series to know that the total odds come out to 50/50.
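If the symmetry argument feels too slick, a quick simulation makes a reasonable cross-check. This is only a sketch: `play` and `estimate` are names invented for illustration, the picks are assumed to be distinct, and the results are estimates that wobble with the seed and the number of trials.

```python
import random

def play(n, picks, rng):
    """Play one game on an n-sided die; return the index of the winner."""
    while True:
        rolls = [rng.randint(1, n) for _ in picks]
        # Players whose roll met or beat their own pick.
        hits = [i for i, (pick, roll) in enumerate(zip(picks, rolls)) if roll >= pick]
        if hits:
            # Among those who succeeded, the highest pick wins.
            return max(hits, key=lambda i: picks[i])
        # Everyone missed: loop around and reroll.

def estimate(n, picks, trials=100_000, seed=0):
    """Estimate each player's win frequency over many simulated games."""
    rng = random.Random(seed)
    wins = [0] * len(picks)
    for _ in range(trials):
        wins[play(n, picks, rng)] += 1
    return [w / trials for w in wins]

if __name__ == "__main__":
    print(estimate(6, [4, 1]))  # second player plays it safe
    print(estimate(6, [4, 5]))  # second player gambles on 5
```

Both calls should land close to an even split. Since `play` takes a list of picks of any length, the same sketch also works for more than two players.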

In general, is it better for the first player if the die has an odd number of sides, or an even number?

This was an inadvertent trick question, based on flawed assumptions I’d made at the time of the last post. Looking at the numbers, there seems to be no pattern to whether a given die will be better or worse for one player depending on whether its sides are odd or even. To the right is a graph of the second player’s odds of winning, for 10- to 30-sided dice. Unlike the previous graph, we can see that the zig-zags do not follow a regular pattern.

Assuming perfect play, what’s the limit to how big an advantage the second player can have (for any die size)?

For this, we have to go back to the second question, and our realization that the best strategy for the first player is to leave the second with two options that are as close to equivalent as possible.

As the number of sides grows huge, the difference in the probability of rolling at least some number M and the probability of rolling at least M+1 becomes very small. Thus, if the second player chooses to take the number one higher than the first player, it becomes as if both are rolling for the same target, except that the second player wins if they both get it. If you think about it for a moment, you realize that this is exactly the same as a game in which they take turns rolling, and the first player to hit the number wins, with the second player having the advantage of the first roll. (That is, if the second player’s first roll hits, he wins, and the first player’s roll doesn’t count. If he misses, though, then we look at the first player’s first roll. If that hits, then the second player doesn’t get a second roll, and so on.)

So, we write the probability of the second player winning recursively as:

W = p + (1-p)^2*W

Where p is the probability of a single player hitting his target number, (1-p)^2 is the probability of both missing, and W is the second player’s total probability of winning (which appears on the right side of the equation because it remains unchanged if both miss).
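Solving the recursion for W is a short rearrangement, sketched here for anyone who wants to check it:

$$
W\left(1-(1-p)^2\right) = p \quad\Longrightarrow\quad W = \frac{p}{1-(1-p)^2} = \frac{p}{2p - p^2} = \frac{1}{2-p}
$$

As a sanity check, p = 1 gives W = 1 (the second player hits immediately), and as p shrinks towards 0, W approaches 1/2, since going first matters less and less when almost every roll misses.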

Now, obviously, if the second player chooses 1 instead, then his chances of winning are just (1-p), since he wins if the first player misses, and loses otherwise. Since we want to make it so that the second player can’t gain any greater advantage whichever he chooses, we can then set W = (1-p), giving us:

(1-p) = p + (1-p)^3

Which is the cubic equation I mentioned earlier, and whose solution within the range 0 < p < 1 is about 0.381966. Since the second player's odds are 1 minus this, as the number of sides on the die gets larger and larger, the second player's odds converge on 0.618034 which, using betting odds, means he's a 1.62-1 favorite over the first player.
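For anyone wondering where the closed form comes from, here is one way to do the algebra. The substitution x = 1 - p (the second player's odds) is my own shorthand for this sketch.

$$
x = (1 - x) + x^3 \quad\Longrightarrow\quad x^3 - 2x + 1 = 0 \quad\Longrightarrow\quad (x - 1)(x^2 + x - 1) = 0
$$

The root x = 1 corresponds to p = 0, which is outside the range, and the quadratic's negative root is no use either. That leaves:

$$
x = \frac{\sqrt{5} - 1}{2} \approx 0.618034, \qquad p = 1 - x = \frac{3 - \sqrt{5}}{2} = 1.5 - \sqrt{1.25} \approx 0.381966
$$

Incidentally, the ratio x/p works out to the golden ratio, about 1.618, which is where the 1.62-1 figure comes from.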

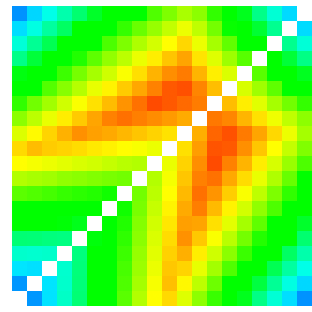

Now, I promised some results for a multiplayer version of the game, but everything turned out to be much more complicated than I’d thought. I have the results, but this post is already very long, so I will again keep you hanging until tomorrow. As a sneak preview, though, here is a graphic I created, showing the odds of winning for the third player’s best choice, given the first and second players’ choices in a game with a 20-sided die. Bluer colors represent better chances for the third player; pure red would be exactly 1/3 chance, but for this size of die, one of the results I found is that the third player ALWAYS has an advantage, regardless of how the other two attempt to conspire against him.